Most things are complicated, even things that appear rather simple.

Take the toilet as an example. As a thought experiment, would you be able to explain to someone else how a toilet works?

If you’re fumbling for an answer, you’re not alone. Most people can’t explain it either.

This is not just a party trick. Psychologists have used several means to discover the extent of our ignorance.

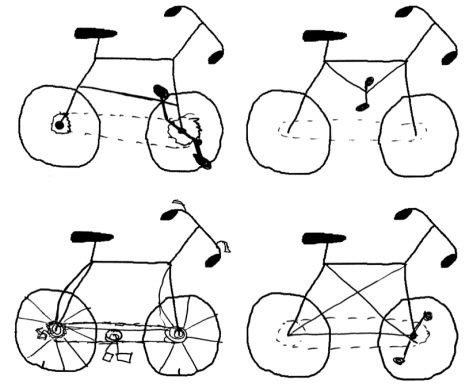

For example, Rebecca Lawson at the University of Liverpool presented people with a drawing of a bicycle which had several components missing. They were asked to fill in the drawing with the missing parts.

Sounds easy, right? Apparently not.

Nearly half of the participants were unable to complete the drawings correctly. Also, people didn’t do much better when they were presented with completed drawings and asked to identify the correct one.

To a greater or lesser extent, we all suffer from an illusion of understanding. That is, we think we understand how the world works when in fact our understanding is rudimentary.

In their new book The Knowledge Illusion, cognitive scientists Steven Sloman and Philip Fernbach explore how we humans know so much, despite our individual ignorance.

Thinking is for action

To appreciate our mental limitations, we first need to ask ourselves: what is the purpose of the human brain?

The authors note there is no shortage of explanations of what the human mind evolved for. For example, there are those who argue the mind evolved to support language, or that it is adapted for social interactions, hunting, or acclimatising to changing climates. “[…] [T]hey are all probably right because the mind actually evolved to do something more general than any of them… Namely, the mind evolved to support our ability to act effectively.”

This more general explanation is important, as it helps establish why we don’t retain all the information we receive.

The reason we’re not all hyperthymesics is that it would make us less successful at what we’ve evolved to do. The mind is busy trying to choose actions by picking out the most useful stuff and leaving the rest behind. Remembering everything gets in the way of focusing on the deeper principles that allow us to recognize how a new situation resembles past situations and what kind of actions will be effective.

The authors argue the mind is not like a computer. Instead, the mind is a flexible problem solver that stores the most useful information to aid survival and reproduction. Storing superficial details is often unnecessary, and at times counterproductive.

Community of knowledge

Evidently, we would not do very well if we relied solely on our individual knowledge. We may consider ourselves highly intelligent, yet we wouldn’t survive very long if we found ourselves alone in the wilderness. So how do we survive and thrive, despite our mental limitations?

The authors argue the secret of our success is our ability to collaborate and share knowledge.

[W]e collaborate. That’s the major benefit of living in social groups, to make it easy to share our skills and knowledge. It’s not surprising that we fail to identify what’s in our heads versus what’s in others’, because we’re generally- perhaps always- doing things that involve both. Whether either of us washes dishes, we thank heaven that someone knows how to make dish soap and someone else knows how to provide warm water from a faucet. We wouldn’t have a clue.

One of the most important ingredients of humanity’s success is cumulative culture— our ability to store and transmit knowledge, enabled by our hyper-sociality and cooperative skills. This fundamental process is known as cultural evolution, and is outlined eloquently in Joe Henrich’s book The Secret of Our Success.

Throughout The Knowledge Illusion, the metaphor of a beehive is used to describe our collective intelligence. “[…][P]eople are like bees and society a beehive: Our intelligence resides not in individual brains but in the collective mind.” However, the authors highlight that unlike beehives, which have remained largely the same for millions of years, our shared intelligence is becoming more powerful and our collective pursuits are growing in complexity.

Collective intelligence

In psychology, the study of intelligence has largely been confined to ranking individuals according to cognitive ability. The authors argue psychologists like general intelligence because it’s readily quantifiable and has some power to predict important life outcomes. For example, people with higher IQ scores do better academically and perform better at their jobs.

Whilst there’s a wealth of evidence in favour of general intelligence, Sloman and Fernbach argue that we may be thinking about intelligence in the wrong way. “Awareness that knowledge lives in a community gives us a different way to conceive of intelligence. Instead of regarding intelligence as a personal attribute, it can be understood as how much an individual contributes to the community.”

A key argument is that groups don’t need a lot of intelligent people to succeed, but rather a balance of complementary attributes and skill-sets. For example, to run a company, you need some people who are cautious and others who are risk takers; some who are good with numbers and others who are good with people.

For this reason, Sloman and Fernbach stress the need to measure group performance, rather than individual intelligence. “Whether we’re talking about a team of doctors, mechanics, researchers, or designers, it is the group that makes the final product, not any one individual.”

A team led by Anita Woolley at the Tepper School of Business have begun devising ways of measuring collective intelligence, with some progress made. The idea of measuring collective intelligence is new, and many questions remain. However, the authors contend that the success of a group is not predominantly a function of the intelligence of individual members, but rather how well they work together.

Committing to the community

Despite all the benefits of our communal knowledge, it also has dangerous consequences. The authors argue that believing we understand more than we do is the source of many of society’s most pressing problems.

Decades’ worth of research shows a significant gap between what science knows and what the public believes. Many scientists have tried addressing this deficit by providing people with more factual information. However, this approach has been less than successful.

For example, Brendan Nyhan’s experiments into vaccine opposition illustrated that factual information did not make people more likely to vaccinate their children. Some of the information even backfired: parents who were given stories of children who contracted measles became more likely to believe that vaccines have serious side effects.

Similarly, the illusion of understanding helps explain the political polarisation we’ve witnessed in recent times.

In the hope of reducing political polarisation, Sloman and Fernbach conducted experiments to see whether asking people to explain their causal understanding of a given topic would make them less extreme. Although they found doing so for non-controversial matters did increase openness and intellectual humility, the technique did not work on highly charged political issues, such as abortion or assisted suicide.

Viewing knowledge as embedded in communities helps explain why these approaches don’t work. People tend to have a limited understanding of complex issues, and have trouble absorbing details. This means that people do not have a good understanding of what they know, and they rely heavily on their community for the basis of their beliefs. This produces passionate, polarised attitudes that are hard to change.

Despite having little to no understanding of complicated policy matters such as U.K. membership of the European Union or the American healthcare system, we feel sufficiently informed about such topics. More than this, we even feel righteous indignation when people disagree with us. Such issues become moralised, where we defend the position of our in-groups.

As stated by Sloman and Fernbach (emphasis added):

[O]ur beliefs are not isolated pieces of data that we can take and discard at will. Instead, beliefs are deeply intertwined with other beliefs, shared cultural values, and our identities. To discard a belief means discarding a whole host of other beliefs, forsaking our communities, going against those we trust and love, and in short, challenging our identities. According to this view, is it any wonder that providing people with a little information about GMOs, vaccines, or global warming has little impact on their beliefs and attitudes? The power that culture has over cognition just swamps these attempts at education.

This effect is compounded by the Dunning-Kruger effect: the unskilled just don’t know what they don’t know. This matters, because all of us are unskilled in most domains of our lives.

According to the authors, the knowledge illusion underscores the important role experts play in society. Similarly, Sloman and Fernbach emphasise the limitations of direct democracy– outsourcing decision making on complicated policy matters to the general public. “Individual citizens rarely know enough to make an informed decision about complex social policy even if they think they do. Giving a vote to every citizen can swamp the contribution of expertise to good judgement that the wisdom of crowds relies on.”

They defend against charges that their stance is elitist or anti-democratic. “We too believe in democracy. But we think that the facts about human ignorance provide an argument for representative democracy, not direct democracy. We elect representatives. Those representatives should have the time and skill to find the expertise to make good decisions. Often they don’t have the time because they’re too busy raising money, but that’s a different issue.”

Nudging for better decisions

By understanding the quirks of human cognition, we can design environments so that these psychological quirks help us rather than hurt us. In a nod to Richard Thaler and Cass Sunstein’s philosophy of libertarian paternalism, the authors provide some nudges to help people make better decisions:

1. Reduce complexity

Because much of our knowledge is possessed by the community and not by us individually, we need to radically scale back our expectations of how much complexity people can tolerate. This seems pertinent for what consumers are presented with during high-stakes financial decisions.

2. Simple decision rules

Provide people with rules or shortcuts that perform well and simplify the decision-making process.

For example, the financial world is just too complicated and people’s abilities too limited to fully understand it.

Rather than try to educate people, we should give them simple rules that can be applied with little knowledge or effort– such as ‘save 15% of your income’, or ‘get a fifteen-year mortgage if you’re over fifty’.

3. Just-in-time education

The idea is to give people information just before they need to use it. For example, a class in secondary school that teaches the basics of managing debt and savings is not that helpful.

Giving people information just before they use it means they have the opportunity to practise what they have just learnt, increasing the chance that it is retained.

4. Check your understanding

What can individuals do to help themselves? A starting point is to be aware of our tendency to be explanation foes.

It’s not practical to master all details of every decision, but it can be helpful to appreciate the gaps in our understanding.

If the decision is important enough, we may want to gather more information before making a decision we may later regret.

Written by Max Beilby for Darwinian Business


References

Fernbach, P. M., Rogers, T., Fox, C. R., & Sloman, S. A. (2013). Political extremism is supported by an illusion of understanding. Psychological Science, 24(6), 939-946.

Haidt, J. (2012). The Righteous Mind: Why good people are divided by politics and religion. Pantheon.

Henrich, J. (2016). The Secret of Our Success: How culture is driving human evolution, domesticating our species, and making us smarter. Princeton University Press.

Kuncel, N. R., Hezlett, S. A., & Ones, D. S. (2004). Academic performance, career potential, creativity, and job performance: Can one construct predict them all? Journal of Personality and Social Psychology, 86(1), 148-161.

Lawson, R. (2006). The science of cycology: Failures to understand how everyday objects work. Memory & Cognition, 34(8), 1667-1675.

Nyhan, B., Reifler, J., Richey, S., & Freed, G. L. (2014). Effective messages in vaccine promotion: a randomized trial. Pediatrics, 133(4), e835-e842.

Sunstein, C., & Thaler, R. (2008). Nudge: Improving decisions about health, wealth and happiness. Yale University Press.

Thaler, R. H. (2013). Financial literacy, beyond the classroom. The New York Times.

Woolley, A. W., Chabris, C. F., Pentland, A., Hashmi, N., & Malone, T. W. (2010). Evidence for a collective intelligence factor in the performance of human groups. Science, 330(6004), 686-688.
